The old egg seller, his eyes weary and hands trembling, continued to sell his eggs at a loss. Each day, he watched the sun rise over the same cracked pavement, hoping for a miracle. But the world was indifferent. His small shop, once bustling with life, now echoed emptiness.

The townspeople hurried past him, their footsteps muffled by their own worries. They no longer stopped to chat or inquire about the weather. The old man’s heart sank as he counted the remaining eggs in his baskets. Six left. Just six. The same number that the woman had purchased weeks ago.

He remembered her vividly: the woman with the determined eyes and the crisp dollar bill. She had driven a hard bargain for those six eggs. “$1.25 or I will leave,” she had said, her voice firm. He had agreed, even though it was less than his asking price. Desperation had clouded his judgment.

Days turned into weeks, and weeks into months. The old seller kept his promise, selling those six eggs for $1.25 each time. He watched the seasons change—the leaves turning from green to gold, then falling to the ground like forgotten dreams. His fingers traced the grooves on the wooden crate, worn smooth by years of use.

One bitter morning, he woke to find frost clinging to the windowpane. The chill seeped through the cracks, settling in his bones. He brewed a weak cup of tea, the steam rising like memories. As he sat on the same wooden crate, he realized that he could no longer afford to keep his small shop open.

The townspeople had moved on, their lives intertwined with busier streets and brighter lights. The old man packed up his remaining eggs, their fragile shells cradled in his weathered hands. He whispered a silent farewell to the empty shop, its walls bearing witness to countless stories—the laughter of children, the haggling of customers, and the quiet moments when he had counted his blessings.

Outside, the world was gray—a canvas waiting for a final stroke. He walked the familiar path, the weight of those six eggs heavier than ever. The sun peeked through the clouds, casting long shadows on the pavement. He reached the edge of town, where the road met the horizon.

And there, under the vast expanse of sky, he made his decision. With tears in his eyes, he gently placed the eggs on the ground. One by one, he cracked them open, releasing their golden yolks. The wind carried their essence away, a bittersweet offering to the universe.

The old egg seller stood there, his heart as fragile as the shells he had broken. He closed his eyes, feeling the warmth of the sun on his face. And in that quiet moment, he whispered a prayer—for the woman who had bargained with him, for the townspeople who had forgotten, and for himself.

As the sun dipped below the horizon, he turned away from the empty road. His footsteps faded, leaving behind a trail of memories. And somewhere, in the vastness of the universe, six golden yolks danced—a silent requiem for a forgotten dream.

Once One of the Most Handsome Men, This Hollywood Legend, 88, Lives Reclusively after a Stroke amid His Kids’ Bitter Feud

Following a stroke, a well-known Hollywood celebrity who was formerly regarded as one of the most attractive men leads a reclusive existence. His children had been at odds for a long time during his health scare.

This attractive French actor, 88, who was once praised as one of the most beautiful men in the world, leads a very different life now that he is no longer in the limelight of Hollywood.

After sadly suffering a stroke in 2019, the “Flic Story” artist, who is aware of how “handsome” he is, lives a reclusive life at his house. This happened a few weeks following his honorary Palme d’Or acceptance in Cannes, France.

His mansion sits behind a magnificent stone wall that stretches about 1.4 miles (2.3 km) and is part of the expansive estate known as La Brûlerie. The estate lies in the Loiret department of central France, near the commune of Douchy-Montcorbon, about 87 miles (140 km) southeast of Paris.

Sources claim that this is not just the actor’s house but also the location of his dream burial, next to a chapel on the grounds of a cemetery he constructed. More than thirty of his cherished hounds are laid to rest in this cemetery.

Despite having France as his home base, sources indicate that the reclusive actor divides his time between his Douchy home, his apartment in Geneva, and his workstation in Paris.

The French sensation has been handling a tense family matter in addition to choosing his final resting place. His three children are in conflict with one another.

His two sons and daughter have engaged in public arguments, leveled allegations, and pursued legal actions over their father and his possessions. The public nature of their arguments has brought the actor’s kids a lot of media attention.

So much so that Christophe Ayela, their father’s attorney, has made an effort to mediate a ceasefire between them. “It must end, and everyone must become calm. That’s enough for now,” reprimanded Christophe.

The family conflict is complicated further by the fact that the “Purple Noon” actor is aware of the dispute and, as his kids attest, has made it quite evident that he has a favorite child.

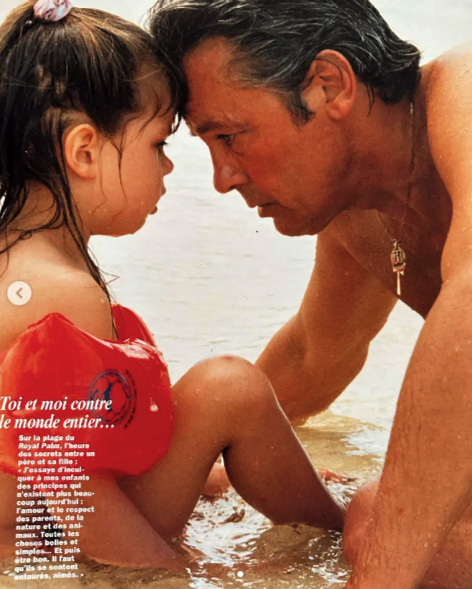

He had earlier said, “I have a daughter who is the love of my life, maybe a little too much in comparison to the others.”

In 2008, he claimed, “I have said I love you to no other woman so often.” Observant viewers speculate that the father may see his sons as competitors, which could explain their tense relationship. This theory is supported by the father’s own remarks and other observations.

The actor’s daughter has made public her intense affection for her father, much as he has for her. She recently posted a heartfelt tribute to him on Instagram, providing followers with an update on the actor’s health.

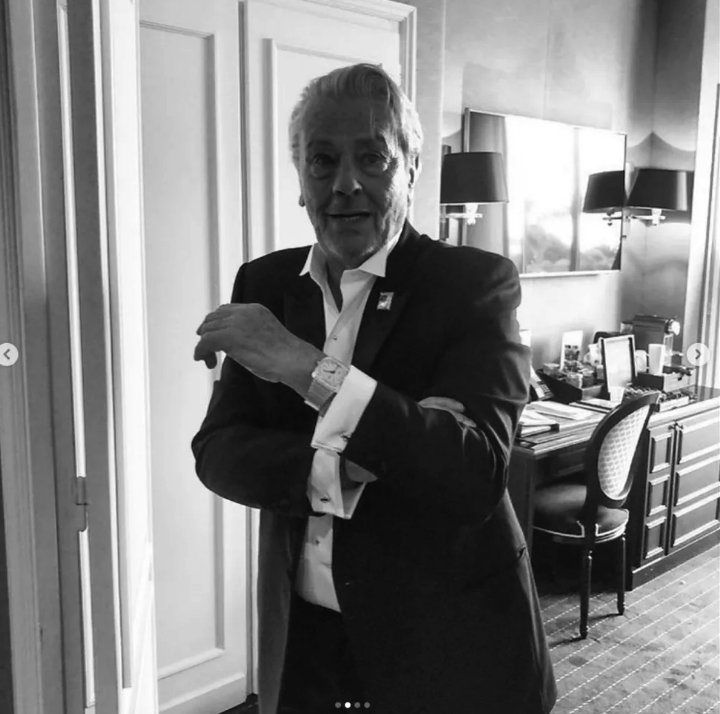

She wrote a touching note in French and included it with a photo of her father. Translated into English, the caption reads, “Friday morning I took a picture of my dad. For myself. A remembrance of our times. Breakfast with him fills me with unending gratitude. A singularly lovely moment.”

She continued by praising his looks, describing him as “handsome” and emphasizing his “vivid” and “fighting” attitude. “My personal eternal,” she penned.

“I showed the image to him. I asked him if I may share it with you, his audience who is interested in him. Thus, it is here with his consent. ‘Don’t worry,’ he responds to your concerns. #love,” the actor’s daughter said.

It’s none other than Alain Delon, the legendary French casanova about whom admirers have been worrying and who has been leading a secluded life. Many of Alain’s admirers responded to his daughter Anouchka Delon’s Instagram photo by leaving comments on the platform.

“As always, he is stunning and gorgeous. Please remain by his side; he needs you more than anybody. I know you adore your father and are very protective of him,” a fan exclaimed. Actor Gilles Marini, who is also French, said, “Remain near. Brimming with affection. Nothing more is important.”

Even though Anouchka and her brothers, Anthony and Alain-Fabien Delon, have not always agreed on everything, they all agree that their father’s financial and medical needs must be met.

According to a French news source earlier this month, Alain’s children banded together in March to demand that their father be put under a “reinforced curatorship.” Before this action, Alain had been placed under judicial protection for “medical monitoring.”

According to the article, their request was granted as of April 4. This implies that a “curator” will be designated to supervise Alain’s finances and act, effectively, on his behalf with regard to matters pertaining to his possessions and, occasionally, his healthcare needs.

Nobody has confirmed the identity of this curator as of yet. It’s unclear if it will be Hiromi Rollin—who the news source called Alain’s “lady in waiting”—or one of his children.

What will happen to Alain’s business, Alain Delon International Diffusion SA, of which he is the President and Anouchka is the Vice President, is another concern. As the curatorship request has been approved, Alain will no longer be able to make decisions in that role.

Nevertheless, Alain is more concerned with the here and now than the minutiae. He revealed in a 2021 interview that he wanted to make one last picture, which he believes has the potential to be his best to date.

The “Borsalino” actor said, “In my life what I loved most was being Alain Delon, the actor Alain Delon,” to end the conversation.

In a prior statement, Alain described himself as an attractive performer. “Observe Purple Noon and Rocco [And His Brothers]! Every woman was enamored with me,” he said. “From when I was 18 till when I was 50.”

Alain is said to have discovered his attraction to women in the 1950s while on a trip to Saint-Germain-des-Prés with a buddy. “I became aware that everybody was staring at me. Women started to inspire me. To them, I owe everything,” Alain said. “They were the ones who motivated me to look better than everyone else.”

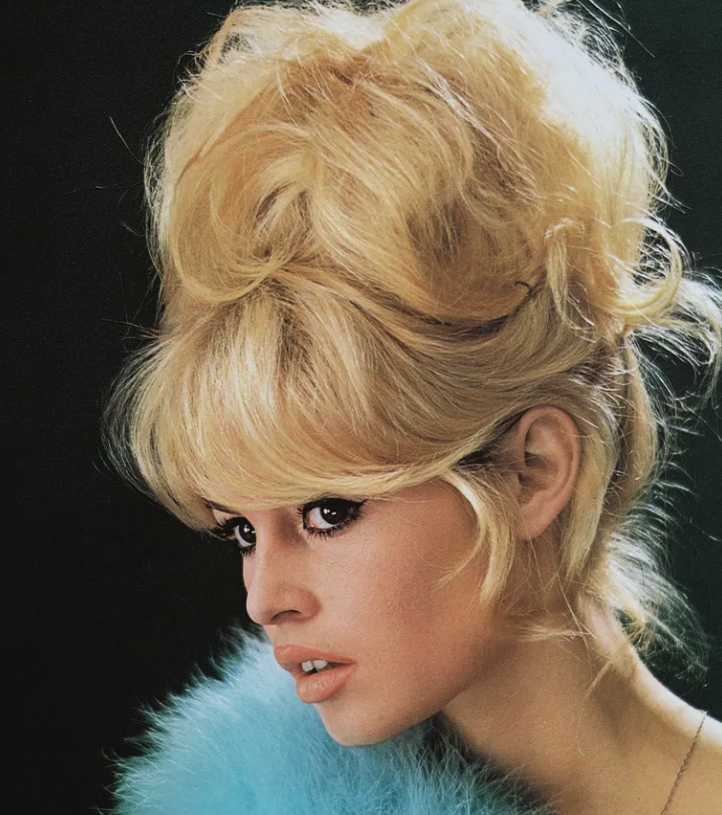

Alain made it his mission to “look better than anyone else,” going so far as to claim the title of “most seductive man in cinema” at the age of 25, and he was even compared to Brigitte Bardot in terms of appearance. Bardot, also a French star, is widely recognized as one of the biggest “It” girls in the history of film.

Her famous roles in many silver-screen movies have earned her recognition and admiration. Playboy, a popular platform for showcasing stunning celebrities, placed the French blonde beauty near the top of its list of the 20th century’s most attractive female stars.

She is even regarded by certain media sources as the greatest “It” girl of all time. In addition to her attractive appearance, Brigitte is well-known for her pouty lips. She ranked fourth on Playboy’s list of the sexiest female stars.

Her seductive confidence and alluring personality also earned her the title of most watched star in her native nation. In addition to her accomplishments as an actor and entertainer, Brigitte has developed a strong reputation as an enthusiastic supporter of animal rights.

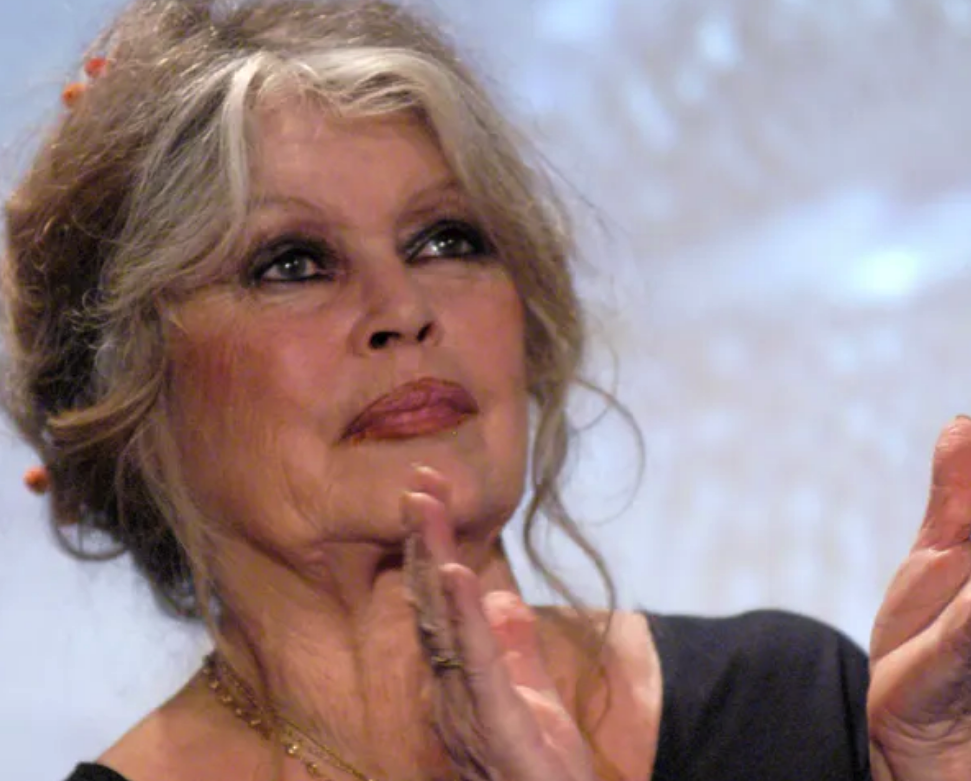

Regarding her private life, the well-liked celebrity, better known by the nickname BB, is a mother of one child and has been wed to Bernard d’Ormale for 31 years. Media sources claim that the pair married in secret in August 1992, inviting just a small number of friends to share in their big day.

Since then, the couple has been happily married. When Brigitte and Bernard first got married, acquaintances of the “Contempt” star informed a news outlet that the abrupt and covert marriage had made her happier than she had been in a long time.

It’s interesting to note that Brigitte’s friends weren’t sure she would get married again after her first spouse died. Nevertheless, the couple lived together in Brigitte’s opulent ten-bedroom mansion in Saint-Tropez after being married in a charming tiny wooden chapel in Norway.

Bernard has supported Brigitte ever since they first met, particularly during her health problems. When French newspapers reported in 1992 that their adored celebrity had supposedly overdosed on sedatives at home, Brigitte’s knight in shining armor promptly reassured the extremely alarmed public that she was okay.

“Brigitte was overwhelmed with fatigue and took too much medication to go to sleep,” he clarified. “After a few hours, she was fine and had not had her stomach pumped.”

Bernard’s claim that his wife was okay was further corroborated by a representative for the clinic where Brigitte was brought. Years after her sedative scare, Brigitte was confronted with yet another health issue.

Bernard attested to Brigitte’s breathing difficulties earlier this year. Fortunately, first responders came to her aid right away, gave her oxygen, and stayed with her to make sure everything was alright.

Bernard cited an intense heatwave that was sweeping through Europe at the time to support his explanation that his wife’s respiratory issues were age- and weather-related. It seems that their La Madrague home’s air conditioning system was not operating at its best.

Brigitte had assured the public that she was fine, but a news source had said that she had remained in the intensive care unit. In a handwritten message, the “A Very Private Affair” star corrected the record, saying, “I want to reassure everyone. I’m doing great right now. The disease that I contracted was a source of scandal for the press.”
